🐾Designing for failure in serverless applications🐾

🤓 Serverless often feels like magic—no servers to manage, effortless auto‑scaling, and you pay only when things run. But that simplicity can lull you into a false sense of security. Behind the scenes, you're still building on distributed systems—and yes, things will fail. It’s not about "if," it’s about "when."

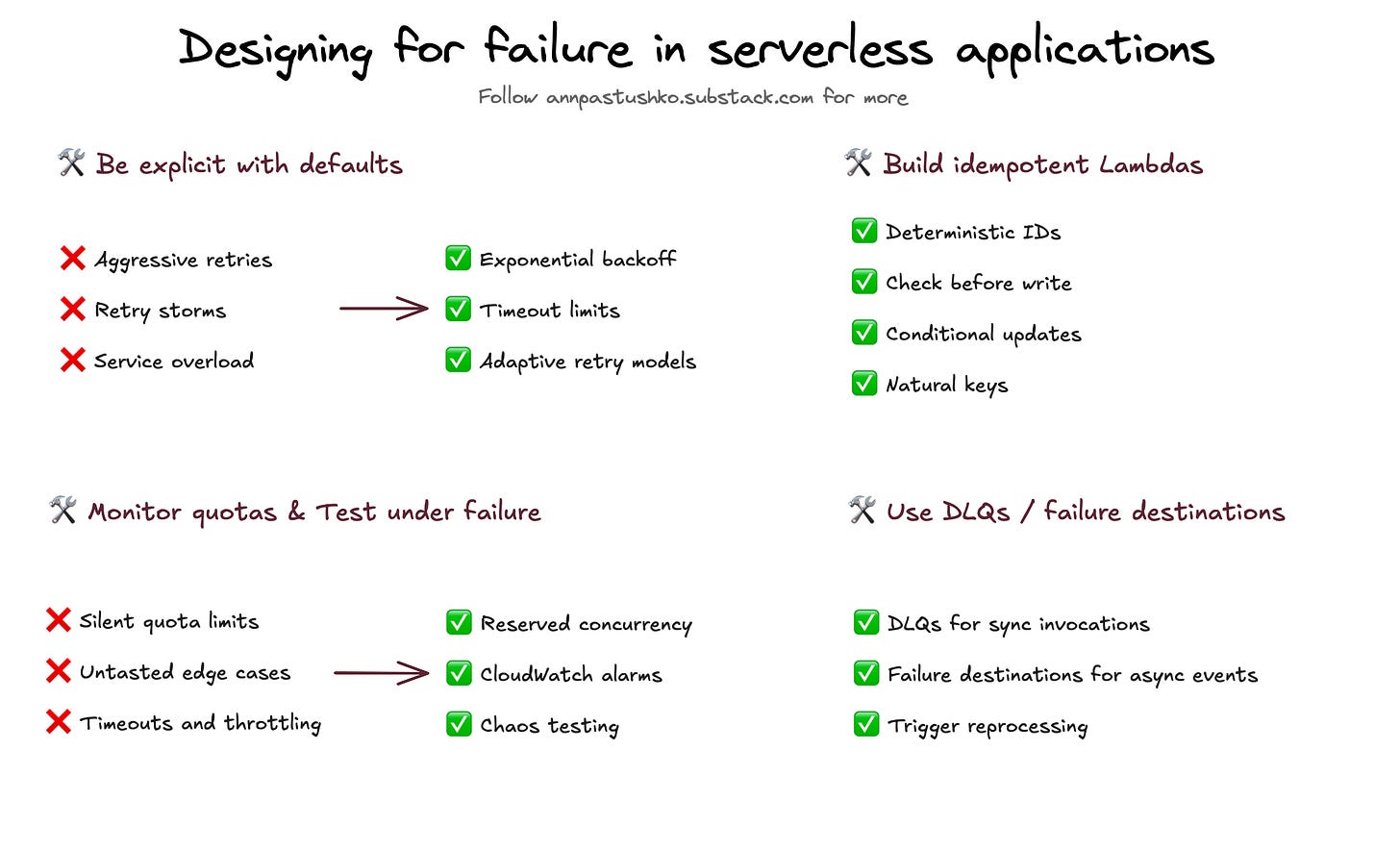

🛠️ Be explicit with defaults

Don’t trust defaults to be production‑ready. The AWS SDKs automatically retry failed requests (such as throttles or 5xx errors), and when every layer of a call chain retries on its own, those retries multiply and can cause retry storms. Configure retry limits, use exponential backoff with jitter, and make timeouts explicit: always set timeout, maxAttempts, and retryMode in the AWS SDK. For Lambda, set function timeouts slightly higher than downstream timeouts to avoid zombie executions.
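
To make "exponential backoff with jitter" concrete, here is a minimal sketch in plain Python. It is not how the AWS SDK implements retries; the names `TransientError`, `backoff_delay`, and `call_with_retries` are made up for illustration, and the default values are assumptions you would tune for your own workload.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (throttles, 5xx responses)."""


def backoff_delay(attempt: int, base: float, cap: float, rng: random.Random) -> float:
    """Full jitter: a random delay in [0, min(cap, base * 2**attempt)]."""
    return rng.uniform(0.0, min(cap, base * (2 ** attempt)))


def call_with_retries(
    fn: Callable[[], T],
    max_attempts: int = 3,
    base: float = 0.1,
    cap: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call fn up to max_attempts times, sleeping with jittered backoff between tries."""
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # retry budget exhausted: surface the error to the caller
            sleep(backoff_delay(attempt, base, cap, rng))
    raise ValueError("max_attempts must be at least 1")
```

The hard cap on attempts is what keeps one failing dependency from turning into a storm, and the random delay keeps many clients from retrying in lockstep. Passing `sleep` and `rng` as parameters is only there to make the behavior easy to test.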

🛠️ Build idempotent Lambdas

Lambdas will be retried by design. If your function writes to a database, publishes an event, or calls an external service, ensure that retrying doesn’t lead to duplicates or inconsistent state. Use deterministic request IDs or natural keys (e.g., order_id) to make writes safe. Consider a “check‑before‑write” pattern or conditional updates in DynamoDB (ConditionExpression). Aim for at-least-once delivery with exactly-once effects. If you want to learn more, this is an absolute must-read.
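
Here is a small sketch of the idea, using an in-memory class as a stand-in for a table with conditional writes. Everything in it (`InMemoryTable`, `put_if_absent`, `handle_order`, the `charges` list) is hypothetical and only illustrates the pattern; it is not DynamoDB's API.

```python
from typing import Any, Dict, List


class ConditionalCheckFailed(Exception):
    """Raised when the key already exists (mirrors a failed conditional write)."""


class InMemoryTable:
    """Hypothetical stand-in for a table that supports conditional puts."""

    def __init__(self) -> None:
        self._items: Dict[str, Dict[str, Any]] = {}

    def put_if_absent(self, key: str, item: Dict[str, Any]) -> None:
        # Check and write as one step; a real store must do this atomically.
        if key in self._items:
            raise ConditionalCheckFailed(key)
        self._items[key] = item


def handle_order(event: Dict[str, Any], table: InMemoryTable, charges: List[str]) -> str:
    """Process an order once, no matter how many times the event is delivered."""
    order_id = event["order_id"]  # natural key used as the idempotency key
    try:
        table.put_if_absent(order_id, {"status": "processed"})
    except ConditionalCheckFailed:
        return "duplicate"  # a retry or redelivery: skip the side effect
    charges.append(order_id)  # the side effect we must not repeat
    return "processed"
```

With DynamoDB, the same claim step would typically be a write with a ConditionExpression such as `attribute_not_exists(order_id)`. Note one gap in this sketch: if the function crashes after claiming the key but before the side effect runs, the work is lost, so a fuller version would also track an in-progress versus completed status.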

🛠️ Use DLQs / failure destinations

Unrecoverable errors shouldn’t block your pipeline. For asynchronous invocations, configure a Dead Letter Queue (DLQ) or an on-failure destination on the function to isolate failed events and trigger alerts or reprocessing; synchronous invocations have neither, so the caller has to handle the error. For example, if a Lambda fails to process an SQS message after max retries, a redrive policy on the source queue can move the message to a dead-letter queue for manual handling or monitoring. Always track what’s failing and why.
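
For the SQS case, the routing is controlled by the `RedrivePolicy` attribute on the source queue. The sketch below only builds that attribute; the helper name `redrive_policy_attributes` and the ARN are made up for illustration, and the retry count of 3 is an assumption, not a recommendation.

```python
import json
from typing import Dict


def redrive_policy_attributes(dlq_arn: str, max_receive_count: int = 5) -> Dict[str, str]:
    """Build the SQS queue attributes that route repeatedly failing messages to a DLQ."""
    if max_receive_count < 1:
        raise ValueError("max_receive_count must be at least 1")
    policy = {
        "deadLetterTargetArn": dlq_arn,
        "maxReceiveCount": str(max_receive_count),
    }
    # SQS expects RedrivePolicy as a JSON-encoded string, not a nested object.
    return {"RedrivePolicy": json.dumps(policy)}


# Hypothetical ARN, for illustration only.
attributes = redrive_policy_attributes(
    "arn:aws:sqs:eu-west-1:123456789012:orders-dlq", max_receive_count=3
)
```

The resulting dictionary is meant to be passed as the `Attributes` of an SQS `SetQueueAttributes` call on the source queue. Pair the DLQ with an alarm on its queue depth, otherwise failed messages just pile up unnoticed.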

🛠️ Monitor quotas

Most serverless services have soft limits. Lambda concurrency, SQS throughput, DynamoDB write capacity, Step Functions state transitions—all can hit limits silently. Use CloudWatch metrics and create alarms for utilization and throttles. Split workloads into separate AWS accounts or use reserved concurrency to isolate critical paths. Proactively request quota increases before you need them.
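
As one example of an alarm on throttles, the sketch below builds the arguments for a CloudWatch alarm on Lambda's `Throttles` metric. The helper name `lambda_throttle_alarm`, the function name `checkout-handler`, and the period and threshold values are assumptions chosen for illustration.

```python
from typing import Any, Dict


def lambda_throttle_alarm(function_name: str, threshold: float = 0.0) -> Dict[str, Any]:
    """Build arguments for a CloudWatch alarm on a function's Throttles metric."""
    return {
        "AlarmName": f"{function_name}-throttles",
        "Namespace": "AWS/Lambda",
        "MetricName": "Throttles",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 60,  # seconds per evaluation window
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        # No data points means no throttling, so do not treat gaps as a breach.
        "TreatMissingData": "notBreaching",
    }


alarm = lambda_throttle_alarm("checkout-handler")  # hypothetical function name
```

These keys match the parameters of CloudWatch's `PutMetricAlarm`, so the dictionary can be passed along as keyword arguments. To actually get notified, you would also add `AlarmActions` with the ARN of an SNS topic, which is left out here.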

🛠️ Test under failure

Designing for failure means testing for it too. Simulate timeouts and throttling using tools like AWS Fault Injection Service or by injecting controlled exceptions into test environments. Watch how your retries behave—do they back off, or do they create a retry storm? Do failures surface with enough context to act on them? Chaos testing should be part of your test plan, not an afterthought.
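
If you go the "inject controlled exceptions" route, a decorator is one lightweight way to do it. This is a sketch for test environments only; `inject_faults`, `InjectedFault`, and `fetch_inventory` are hypothetical names, and the 30% failure rate is just an example value.

```python
import functools
import random
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class InjectedFault(Exception):
    """Raised on purpose by the fault injector; never by real code paths."""


def inject_faults(failure_rate: float, rng: Optional[random.Random] = None):
    """Decorator that makes a call fail with probability failure_rate."""
    if not 0.0 <= failure_rate <= 1.0:
        raise ValueError("failure_rate must be between 0 and 1")
    rng = rng or random.Random()

    def decorator(fn: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs) -> T:
            # Fail before calling through, as a throttled dependency would.
            if rng.random() < failure_rate:
                raise InjectedFault(f"injected failure in {fn.__name__}")
            return fn(*args, **kwargs)

        return wrapper

    return decorator


@inject_faults(failure_rate=0.3, rng=random.Random(42))
def fetch_inventory(sku: str) -> int:
    """Hypothetical downstream call used only for this example."""
    return 7
```

Seeding the random generator makes a failing test run reproducible. Wrap the calls your retries are supposed to protect, then check that the retry count stays bounded and that the injected error shows up in your logs with enough context.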

Thank you for reading, let’s chat 💬

💬 What's the most painful serverless failure you've experienced?

💬 What's your go-to chaos engineering tool for testing serverless apps?

💬 Are you using AWS Fault Injection Service, custom scripts, or something else?

I love hearing from readers 🫶🏻 Please feel free to drop comments, questions, and opinions below👇🏻