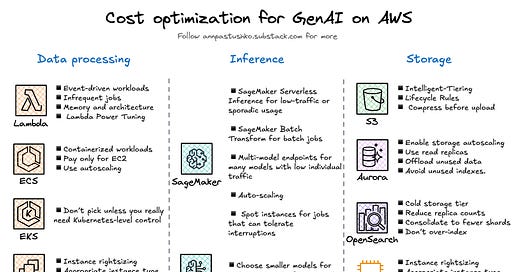

🤓 Over the past few months, I’ve received many questions about cost optimization for Generative AI workloads. While it may seem like GenAI requires an entirely new strategy, the truth is that most of the well-known AWS cost optimization techniques apply here just as well. Let’s break down these techniques according to the GenAI pipeline components.

⚙️ Data pre- and post-processing

For tasks like prompt cleanup, format conversion, or output ranking, you’re probably using EC2, ECS, or Lambda. Choose wisely—this alone can save you a lot.

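Lambda is perfect for event-driven or infrequent jobs. Use AWS Lambda Power Tuning to find the memory setting with the best cost/speed trade-off (memory also determines how much CPU the function gets). Try the arm64 (Graviton) architecture, which is priced lower per GB-second than x86.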
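To make the memory trade-off concrete, here is a minimal sketch of the arithmetic behind that kind of tuning. The per-GB-second prices and the measured durations are assumptions for illustration only (check the current Lambda pricing page for your region), and the helper names are hypothetical.

```python
# Illustrative per-GB-second prices (assumed; verify against current Lambda pricing).
PRICE_PER_GB_SECOND = {"x86_64": 0.0000166667, "arm64": 0.0000133334}


def invocation_cost(memory_mb: int, duration_ms: float, arch: str = "x86_64") -> float:
    """Compute cost of one invocation, ignoring the per-request fee."""
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000)
    return gb_seconds * PRICE_PER_GB_SECOND[arch]


def cheapest_memory(measurements: dict, arch: str = "x86_64") -> int:
    """Return the memory size (MB) with the lowest cost per invocation.

    `measurements` maps memory size in MB to the average duration in ms
    observed at that size.
    """
    return min(measurements, key=lambda mb: invocation_cost(mb, measurements[mb], arch))


# Hypothetical measurements: more memory means more CPU, so duration drops.
measured = {512: 2400.0, 1024: 1000.0, 2048: 620.0}
best = cheapest_memory(measured)
print(f"Cheapest memory setting: {best} MB")
```

With these made-up numbers the middle setting wins: doubling memory from 512 MB more than halves the duration, while the next doubling does not.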

ECS on EC2 is cost-efficient for steady container workloads. You only pay for EC2, and container orchestration is free.

EKS adds management overhead and per-cluster fees—don’t pick it unless you really need Kubernetes-level control.

EC2: Use Auto Scaling and make sure your instances aren’t oversized. Switch to Spot instances for non-critical batch jobs. You can read more about EC2 rightsizing in the blog post below.

💡 Bonus tip: Use autoscaling for ECS and EC2 to save cost during non-peak hours.

🧠 Model inference

This is usually the most expensive part of the pipeline. Be intentional.

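Need real-time? Use SageMaker Serverless Inference for low-traffic or sporadic usage: it scales to zero when idle, so you pay only while requests are being processed (expect cold starts after idle periods).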

For batch jobs, SageMaker Batch Transform is usually much cheaper than keeping an endpoint always-on, because the instances run only for the duration of the job.
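A quick back-of-the-envelope comparison shows why. This is only a sketch: the hourly price and the job profile below are hypothetical placeholders, not real SageMaker prices.

```python
HOURS_PER_MONTH = 730  # average number of hours in a month


def always_on_monthly_cost(hourly_price: float, instance_count: int = 1) -> float:
    """Cost of an endpoint that runs around the clock."""
    return hourly_price * instance_count * HOURS_PER_MONTH


def batch_monthly_cost(hourly_price: float, job_hours: float,
                       jobs_per_month: int, instance_count: int = 1) -> float:
    """Cost of batch jobs that are billed only while they run."""
    return hourly_price * instance_count * job_hours * jobs_per_month


hourly = 1.00  # hypothetical instance price in USD per hour
endpoint = always_on_monthly_cost(hourly)
batch = batch_monthly_cost(hourly, job_hours=2, jobs_per_month=30)
print(f"Always-on endpoint: ${endpoint:.2f}/month, daily 2-hour batch job: ${batch:.2f}/month")
```

The gap shrinks as the job runs longer or more often, so rerun the numbers with your own instance type and schedule.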

Multi-model endpoints let you host several models behind one SageMaker endpoint—great for many models with low individual traffic.

Always enable auto-scaling, and set low minimums during off-hours.
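One way to lower the minimums outside business hours is a pair of scheduled actions in Application Auto Scaling. The sketch below only builds the request parameters. The endpoint name, variant name, capacities, and cron times are assumptions, and the helper function is hypothetical.

```python
def off_hours_schedule(endpoint_name: str, variant_name: str,
                       day_min: int, day_max: int,
                       night_min: int, night_max: int) -> list:
    """Build keyword arguments for two put_scheduled_action calls."""
    target = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    return [
        {
            **target,
            "ScheduledActionName": "off-hours-scale-down",
            "Schedule": "cron(0 20 * * ? *)",  # 20:00 UTC every day (assumed)
            "ScalableTargetAction": {"MinCapacity": night_min, "MaxCapacity": night_max},
        },
        {
            **target,
            "ScheduledActionName": "business-hours-scale-up",
            "Schedule": "cron(0 7 * * ? *)",  # 07:00 UTC every day (assumed)
            "ScalableTargetAction": {"MinCapacity": day_min, "MaxCapacity": day_max},
        },
    ]


# Hypothetical endpoint and capacities.
actions = off_hours_schedule("my-endpoint", "AllTraffic",
                             day_min=2, day_max=8, night_min=1, night_max=2)
for action in actions:
    print(action["ScheduledActionName"], action["Schedule"])
```

Each dictionary can be passed to `boto3.client("application-autoscaling").put_scheduled_action(**action)` once the variant has been registered as a scalable target with `register_scalable_target`.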

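Use Spot capacity (for example, SageMaker Managed Spot Training) for training and other jobs that can tolerate interruptions. SageMaker Asynchronous Inference endpoints can additionally scale in to zero instances while their queue is empty.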

If you use Bedrock, choose smaller models like Claude Instant or Mistral for cost-sensitive tasks; a smaller model can still be effective when prompts are optimized. You can find more Bedrock cost optimization tips in this blog post.

💡 Compare pricing across foundation model providers. Sometimes a fine-tuned small model performs better and costs less than a general-purpose giant.
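Since on-demand foundation models are typically billed per input and output token, comparing candidates is simple arithmetic. Here is a minimal sketch, where the model names and per-1K-token prices are hypothetical placeholders rather than real Bedrock prices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPrice:
    name: str
    input_per_1k: float   # USD per 1,000 input tokens (hypothetical)
    output_per_1k: float  # USD per 1,000 output tokens (hypothetical)


def monthly_cost(price: ModelPrice, input_tokens: int, output_tokens: int,
                 requests_per_month: int) -> float:
    """Estimate monthly spend for a fixed request shape."""
    per_request = (input_tokens / 1000) * price.input_per_1k \
        + (output_tokens / 1000) * price.output_per_1k
    return per_request * requests_per_month


# Placeholder prices, for illustration only.
small = ModelPrice("small-model", input_per_1k=0.001, output_per_1k=0.002)
large = ModelPrice("large-model", input_per_1k=0.01, output_per_1k=0.03)

for model in (small, large):
    cost = monthly_cost(model, input_tokens=2000, output_tokens=500,
                        requests_per_month=100_000)
    print(f"{model.name}: about ${cost:,.0f} per month")
```

The same function also shows what prompt trimming is worth: lower `input_tokens` and compare the result.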

💾 Storage

Storage sneaks up on you in GenAI—especially when you're dealing with training data and embeddings.

Training data in S3:

Use S3 Intelligent-Tiering to automatically move cold data to cheaper storage.

Apply S3 Lifecycle Rules to archive or delete outdated versions.

Compress large datasets before upload.
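The three tips above can be sketched in a few lines. The prefix, day counts, and sample data are assumptions, and the helper function is hypothetical. The dictionary follows the shape that the S3 `put_bucket_lifecycle_configuration` call expects.

```python
import gzip


def training_data_lifecycle(prefix: str, tier_after_days: int,
                            expire_noncurrent_after_days: int) -> dict:
    """Build a lifecycle configuration for a training-data prefix."""
    return {
        "Rules": [
            {
                "ID": "training-data-cost-control",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # Move objects to Intelligent-Tiering after the given age.
                "Transitions": [
                    {"Days": tier_after_days, "StorageClass": "INTELLIGENT_TIERING"}
                ],
                # Delete outdated object versions in a versioned bucket.
                "NoncurrentVersionExpiration": {
                    "NoncurrentDays": expire_noncurrent_after_days
                },
            }
        ]
    }


config = training_data_lifecycle("training-data/", tier_after_days=30,
                                 expire_noncurrent_after_days=90)

# Compress before upload: repetitive text such as JSON Lines shrinks a lot.
sample = b'{"prompt": "hello", "completion": "world"}\n' * 1000
compressed = gzip.compress(sample)
print(f"{len(sample)} bytes raw, {len(compressed)} bytes gzipped")
```

To apply the rule, pass it as `LifecycleConfiguration=config` together with your bucket name to `boto3.client("s3").put_bucket_lifecycle_configuration`.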

Embeddings / RAG context storage:

If you use OpenSearch, consider cold storage tiers for rarely accessed embeddings. For non-latency-critical RAG workloads, you can reduce replica counts to cut costs, though this reduces fault tolerance. Consolidate to fewer shards where possible to reduce management overhead. Don’t over-index: only index the fields you query on, since every indexed field adds storage and compute cost.
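To see how much replicas matter, it helps to estimate the raw size of the vectors first. This is a rough sketch: the vector count and dimension are hypothetical, values are assumed to be 4-byte floats, and the result is a lower bound because it ignores index structures and metadata.

```python
def raw_vector_bytes(num_vectors: int, dimensions: int, bytes_per_value: int = 4) -> int:
    """Raw size of the embeddings alone, assuming 4-byte float values."""
    return num_vectors * dimensions * bytes_per_value


def total_with_replicas(primary_bytes: int, replicas: int) -> int:
    """Each replica stores a full copy of the primary data."""
    return primary_bytes * (1 + replicas)


# Hypothetical corpus: 10 million embeddings with 1536 dimensions each.
primary = raw_vector_bytes(10_000_000, 1536)

for replicas in (2, 1):
    total_gb = total_with_replicas(primary, replicas) / 1e9
    print(f"{replicas} replica(s): {total_gb:.2f} GB of raw vectors")
```

In this example, going from two replicas to one removes a full copy of the data, which is the saving you trade against fault tolerance.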

If you use Amazon Aurora, there is no need to overprovision storage: the cluster volume grows automatically and you are billed for what you use. Use read replicas for heavy query loads and scale them independently. Regularly offload data you no longer need, and drop unused indexes.

If you use self-managed vector DBs on EC2 (e.g., FAISS, Qdrant, Milvus), apply standard EC2 cost-optimization techniques: rightsize your instances, choose an appropriate instance type, and consider using Savings Plans.

Thank you for reading, let’s chat 💬

💬 Do you know any other GenAI cost optimization tips?

💬 Are you concerned about the cost of your GenAI workloads?

💬 Which component is the biggest cost driver in your pipeline?

I love hearing from readers 🫶🏻 Please feel free to drop comments, questions, and opinions below👇🏻
